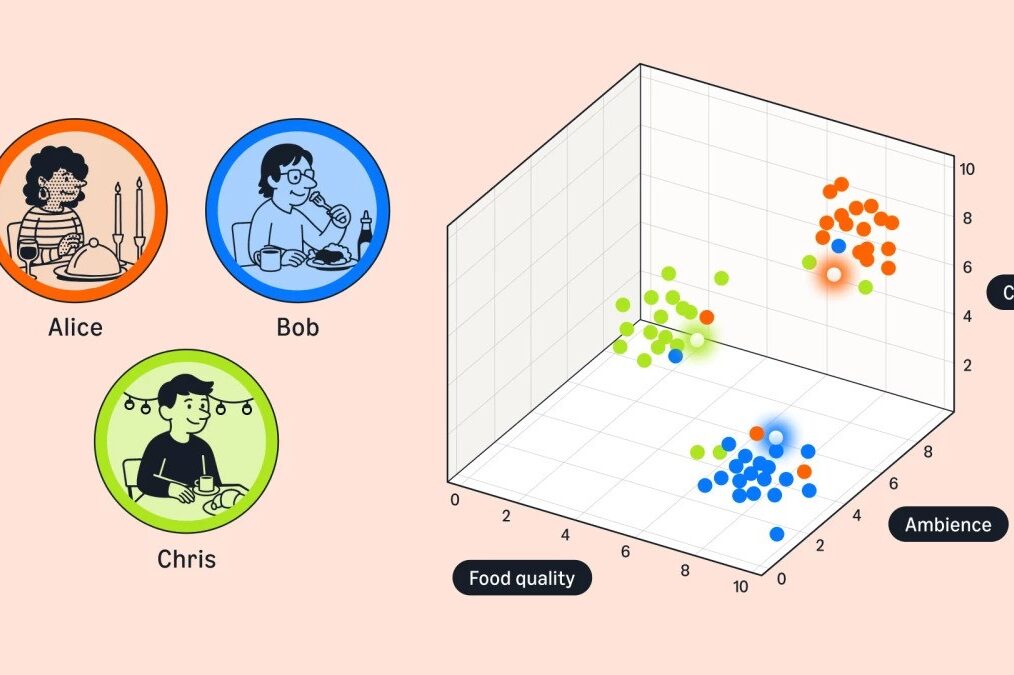

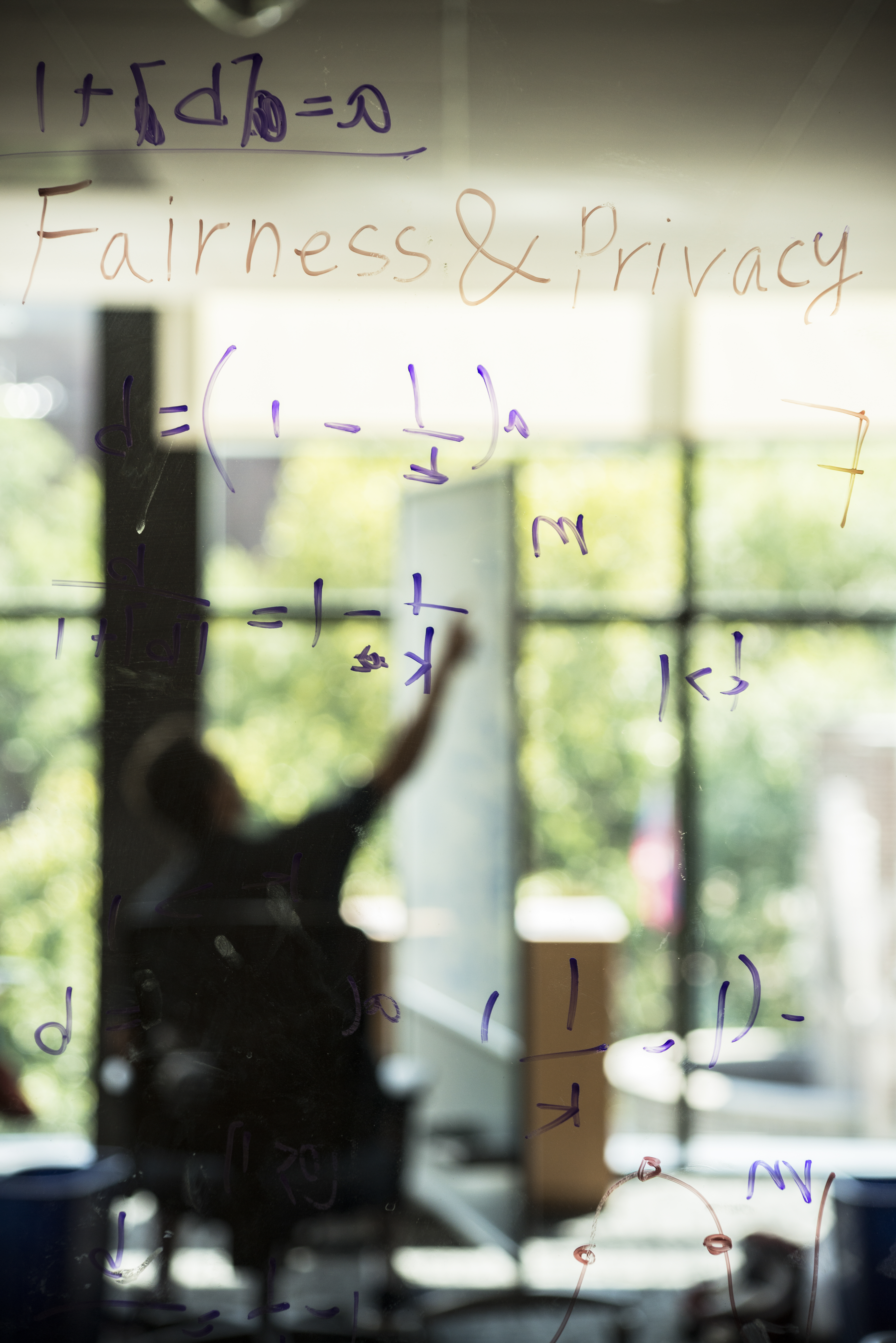

It has been argued that automation, by removing the human element, guarantees fairness, or at least lets us evaluate fairness transparently by examining the algorithm’s code. Recent empirical research, however, demonstrates that automation is no panacea and does not prevent “unfair” discrimination. Moreover, the reasons for this unfairness can be complex and non-obvious. This research initiative investigates how social norms, such as privacy, fairness and security, can be introduced into algorithms at the level of the code itself.

Warren Center faculty affiliates from Arts & Sciences, Engineering, Law and Wharton have been examining these issues in depth. Their collaborations on the implications of the widespread use of algorithms have resulted in many publications, and workshops have given them a platform to share their findings. The Warren Center has helped facilitate these connections by organizing and sponsoring events that encourage further research into how technological advances affect privacy, fairness and security.